-

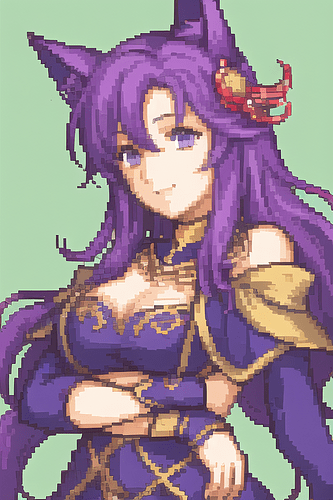

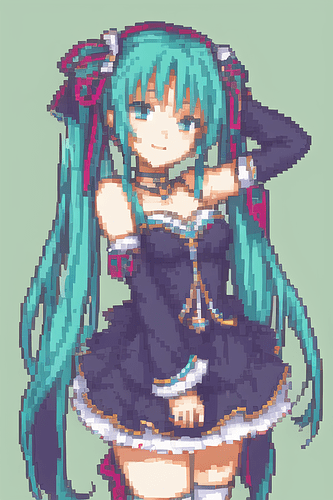

First, have the AI generate a variety of images.

If it generates something interesting, you can share it online as is.

Even if you don’t turn it into a portrait yourself, someone else may be able to make the shared data work in a game.

-

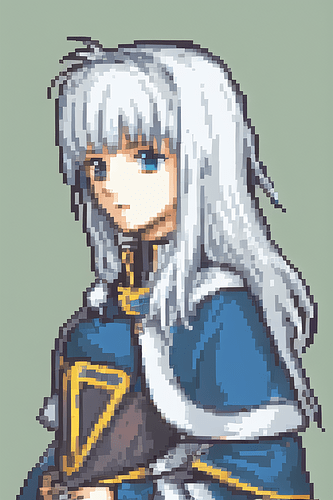

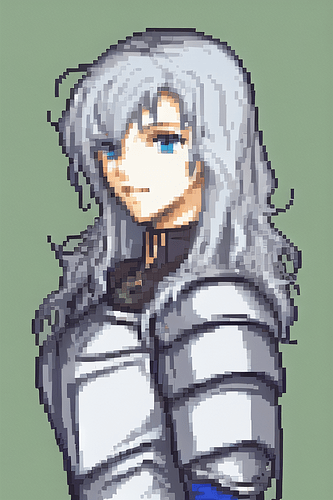

First, resize the AI-generated picture so that the face is the same size as a game character's face.

Do this before reducing the colors. If you reduce the colors first, palette entries get spent on clothing that is later cropped away, and the colors of the clothing that remains suffer.

Vanilla portraits are 96 pixels wide, but resizing the picture to about 100 pixels wide works fine.

Trim off the extra margin when you center the face.
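As a rough illustration of the resize step, here is a minimal sketch that works out the new image size for a target width of about 100 pixels. It assumes you want to keep the aspect ratio of the generated picture, which the steps above do not state explicitly, and `scaled_size` is a name I made up for this example.

```python
def scaled_size(src_width, src_height, target_width=100):
    """Return (width, height) after scaling to target_width.

    Assumption: the aspect ratio of the source picture is preserved.
    """
    if src_width <= 0 or src_height <= 0 or target_width <= 0:
        raise ValueError("sizes must be positive")
    scale = target_width / src_width
    # Never let the height collapse to zero on very wide pictures.
    return target_width, max(1, round(src_height * scale))


if __name__ == "__main__":
    # A 512x768 generated picture scaled to 100 pixels wide.
    print(scaled_size(512, 768))
```

The numbers can then be typed into the resize dialog of whatever image editor you use.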

-

Once the face is the right size, crop the picture to the required 96x80 size.
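The crop can be sketched as a small calculation. This example assumes you have already picked the pixel that should be the center of the face (`face_cx`, `face_cy` are hypothetical inputs, as is the function name `crop_box`). It returns a box in (left, top, right, bottom) order and clamps it so the 96x80 window never leaves the picture.

```python
def crop_box(img_width, img_height, face_cx, face_cy, out_width=96, out_height=80):
    """Return (left, top, right, bottom) for a 96x80 crop around the face center."""
    if img_width < out_width or img_height < out_height:
        raise ValueError("picture is smaller than the crop size")
    left = face_cx - out_width // 2
    top = face_cy - out_height // 2
    # Clamp so the window stays inside the picture.
    left = max(0, min(left, img_width - out_width))
    top = max(0, min(top, img_height - out_height))
    return left, top, left + out_width, top + out_height


if __name__ == "__main__":
    # A picture resized to 100x150, with the face centered at (50, 40).
    print(crop_box(100, 150, 50, 40))
```

With a 100-pixel-wide picture only 4 pixels of margin are trimmed horizontally, so most of the choice is about where the crop sits vertically.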

-

Reduce the number of colors to 16.

It may be more efficient to reduce the picture to 15 colors and then repaint the background with a separate 16th color.

Otherwise the color reduction may also use the background color for parts of the eyes or clothing.

I got pretty good results when I used Padie to reduce the colors.

https://www.vector.co.jp/soft/win95/art/se063024.html

This software is very old, dating from the Windows 95 era.

The UI can be switched to English.

By default it supports only BMP files (PNG files can be loaded with a plugin).

-

If you reduced the picture to 15 colors, repaint the background using the 16th palette color.
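The background repaint is essentially a flood fill. The sketch below assumes the picture is held as a list of rows of palette indices (0 to 14 after the 15-color reduction) and that the top-left pixel belongs to the background. Both are assumptions for illustration, and `repaint_background` is a made-up name. Only pixels connected to the starting corner are moved to index 15, so eyes or clothing that happen to share the old background color are left alone.

```python
from collections import deque

BACKGROUND_INDEX = 15  # the 16th palette entry, counting from 0


def repaint_background(pixels, start=(0, 0), new_index=BACKGROUND_INDEX):
    """Flood fill the region connected to `start` with `new_index`.

    `pixels` is a list of rows of palette indices; a new grid is returned.
    """
    out = [row[:] for row in pixels]
    height, width = len(out), len(out[0])
    sx, sy = start
    old_index = out[sy][sx]
    if old_index == new_index:
        return out
    out[sy][sx] = new_index
    queue = deque([(sx, sy)])
    while queue:
        x, y = queue.popleft()
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if 0 <= nx < width and 0 <= ny < height and out[ny][nx] == old_index:
                out[ny][nx] = new_index
                queue.append((nx, ny))
    return out


if __name__ == "__main__":
    # Index 3 is both the background and one enclosed "eye" pixel.
    picture = [
        [3, 3, 3, 3, 3],
        [3, 1, 1, 1, 3],
        [3, 1, 3, 1, 3],
        [3, 1, 1, 1, 3],
        [3, 3, 3, 3, 3],
    ]
    for row in repaint_background(picture):
        print(row)
```

If the background is split into several regions (for example by hair that reaches the edge of the picture), the fill would need to be started once in each region.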

-

Repaint any gaps or flaws in the black outline.

This is easier with a tool that has an outlining function, such as EDGE.

Pixel art looks better with a black outline, which creates a sharp contrast between light and dark areas.
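If you want to find outline gaps without hunting for them by eye, the check can be sketched like this. It assumes the picture is a list of rows of palette indices, that the background uses index 15, and that black sits at index 0. The black index in particular is a hypothetical choice, so pass in whatever your palette actually uses. The function lists every pixel that touches the background but is neither background nor black.

```python
def find_outline_gaps(pixels, background_index=15, outline_index=0):
    """Return (x, y) of pixels that touch the background but are not outlined.

    Assumption: `outline_index` is the palette entry holding black.
    """
    height, width = len(pixels), len(pixels[0])
    gaps = []
    for y in range(height):
        for x in range(width):
            if pixels[y][x] in (background_index, outline_index):
                continue
            neighbours = ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1))
            if any(
                0 <= nx < width and 0 <= ny < height
                and pixels[ny][nx] == background_index
                for nx, ny in neighbours
            ):
                gaps.append((x, y))
    return gaps


if __name__ == "__main__":
    picture = [
        [15, 15, 15, 15],
        [15, 0, 0, 15],
        [15, 0, 2, 15],  # the 2 touches the background: a gap in the outline
        [15, 15, 15, 15],
    ]
    print(find_outline_gaps(picture))
```

This only checks the four direct neighbours, so diagonal gaps are not reported. The pixels on the edge of the picture are not flagged either, since the portrait is normally cut off there.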

-

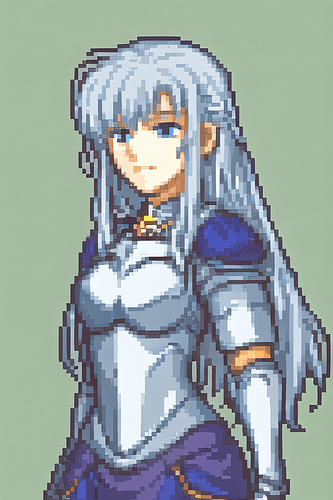

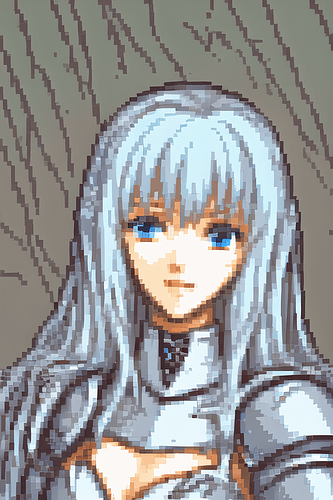

From here, we will build the 128x112 sheet, which is the size of the original portrait sheets.

The sheet will eventually need a mouth frame and eye frames, but before drawing them, import the sheet into the game and check the size of the face.

If the face size is wrong, you will have to redo all of the following steps.

So first insert the current data into the game and put it in a conversation with Seth to confirm that the face size is correct.
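Before importing, it can save a round trip to check the two hard limits mentioned so far: the 128x112 sheet size and the 16-color limit. This is only a sketch on a list of rows of palette indices (an assumed representation), and `check_sheet` is a made-up helper, not part of FEBuilderGBA or any other tool.

```python
SHEET_WIDTH, SHEET_HEIGHT = 128, 112  # sheet size given above
MAX_COLORS = 16


def check_sheet(pixels):
    """Return a list of problems; an empty list means both checks passed."""
    problems = []
    row_widths = {len(row) for row in pixels}
    if len(pixels) != SHEET_HEIGHT or row_widths != {SHEET_WIDTH}:
        problems.append(f"sheet must be {SHEET_WIDTH}x{SHEET_HEIGHT}")
    used = {index for row in pixels for index in row}
    if len(used) > MAX_COLORS:
        problems.append(f"{len(used)} colors used, limit is {MAX_COLORS}")
    return problems


if __name__ == "__main__":
    blank_sheet = [[15] * SHEET_WIDTH for _ in range(SHEET_HEIGHT)]
    print(check_sheet(blank_sheet))
```

It does not check the face size itself, which still has to be judged in the game.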

-

Create a frame for the mouth and eyes.

They are easier to draw if you refer to the frames of existing vanilla-style portraits.

(I wish I could create these in AI as well.)

-

You can check that the mouth and eye frames are correct in FEBuilderGBA, or in the game itself.

-

When you are satisfied with the result, make a minimug to finish the portrait.

-

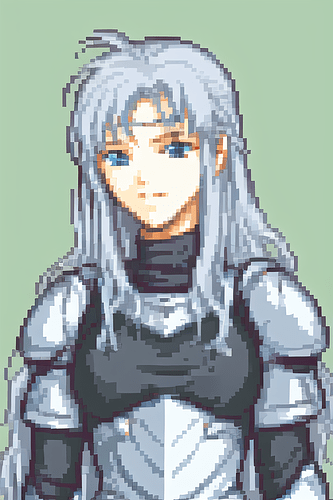

Please contribute to the community while showing off your work by submitting it to REPO.

Better to share than to monopolize!

Matching the face size, staying within the 16-color limit, and drawing the eye and mouth frames is very tedious.

It would be much easier if a tool or system were developed to assist with these steps.

Apparently Klokinator is making something better.

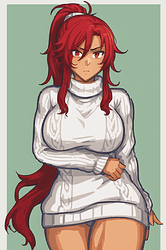

AI also excels at painting landscapes.

I am reducing the colors of some BGs so that they can be used in GBAFE.

The AI will also allow you to create nice single-picture cut-in scenes.

However, to draw the same character consistently, you will need to spend some time and effort training a LoRA on that character.

If you can draw, you can rework the AI-generated picture to improve it, or use it as a reference for composition.

It is good to think of AI as an assistant, not an enemy.

This AI technology has been advancing at several times the rate of dog years since it was released last summer.

New technology is being released to the public every month.

I believe that by using technology well, we can do more interesting things.